Introduction

Data Centre Migrations (DCMs) often involve setting up temporary network connections to move workloads from the old Data Centres to the new ones; let’s call them “migration links”. The network teams will need to set up routing so that migration traffic can flow across these temporary links. The goal is to get end-to-end bandwidth equal to that of the migration link, or at least close to it: if you have a 1 Gbps temporary link, you would like the bandwidth of that link to be available from end system to end system.

Often the temporary network hardware is put in some sort of DMZ, at least at the source end, so migration traffic has to traverse the existing network in the source DCs to reach the migration link. This is not always trouble free, and in my experience most DCMs hit bandwidth issues with some subset of servers. My war stories include traffic being routed via an old and forgotten firewall with only a 100 Mbps interface, routers and switches with auto-negotiate/hard-setting mismatches, faulty cabling, faulty NICs and so on. The result in all these cases was unexpectedly low throughput for the migration of some servers.

Sometimes the migration tool you are using will give you effective bandwidth figures. Even so, it is useful to have a tool that generates network load and gives you an accurate end-to-end throughput figure. For me, more often than not, that tool is “Iperf”. Iperf is an open source tool that is widely used and trusted for network performance measurement.

Getting Iperf

Iperf has gone through three major versions. The latest, iperf3, is a complete rewrite of the tool, developed principally by ESnet and Lawrence Berkeley National Laboratory. Their website can be found here. Note, however, that this site only hosts source code distributions of iperf, which you will need to build into binaries yourself. A significant number of pre-built binary packages for Windows and Linux can be found at the iperf.fr site here. There are also ports around for Solaris, AIX and HP-UX, although none are iperf version 3 as far as I have been able to ascertain.

Running Iperf

iperf uses a client-server model. You set one system up to run as an iperf server and the other to run as a client. Usually it does not matter which way round you do this. Although iperf3 has a GUI of sorts, the traditional way to run iperf is from the command line. To start a server (assuming we are using iperf3), use the command:

iperf3 -s -f M

The -s flag tells iperf to run in server mode. The optional -f M flag tells iperf to report its statistics in megabytes per second. The server will then sit waiting for connections. Iperf 3 uses port 5201 by default. You will typically have to open this port in the Windows Firewall, or in iptables on Linux, to allow iperf to work. Also, if you are going to be traversing network firewalls between your iperf server and iperf client, you will need to get rules added to those firewalls.

To run the client end, issue the command:

iperf3 -c <ip-or-dns-name-of-iperf-server> -f M

Usually no firewall rule changes are required on the client OS itself.
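If you would rather capture the figure in a script than read it off the console, iperf3 can emit its report as JSON with the -J flag. The following is a minimal Python sketch rather than a definitive implementation. The function names are hypothetical, and it assumes a TCP test whose JSON report carries the receiver-side rate under end.sum_received.bits_per_second. It reports decimal megabytes (10^6 bytes), so the figure may be scaled slightly differently from the console output of -f M.

```python
import json
import subprocess


def build_client_cmd(server, port=5201, seconds=10):
    """Build the iperf3 client command line with JSON output (-J)."""
    return ["iperf3", "-c", server, "-p", str(port), "-t", str(seconds), "-J"]


def mbytes_per_sec(report):
    """Convert the receiver-side rate in an iperf3 JSON report to MBps.

    Assumes a TCP test, where the summary sits under end.sum_received.
    """
    bits_per_second = report["end"]["sum_received"]["bits_per_second"]
    return bits_per_second / 8 / 1_000_000  # bits -> bytes -> megabytes


def run_client(server, port=5201, seconds=10):
    """Run one iperf3 client test and return the throughput in MBps."""
    result = subprocess.run(
        build_client_cmd(server, port, seconds),
        capture_output=True, text=True, check=True,
    )
    return mbytes_per_sec(json.loads(result.stdout))
```

Calling run_client with the name or address of your iperf server returns a single number you can log or compare across server pairs. It needs iperf3 on the PATH and a server already listening at the other end.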

What is normal?

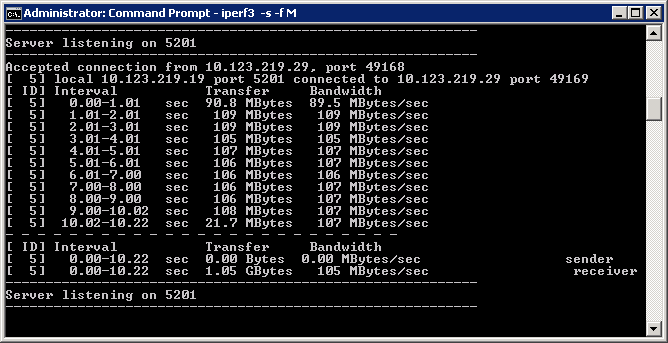

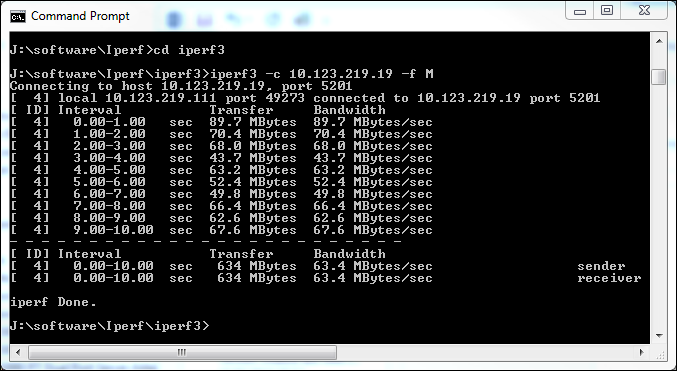

OK so you have run Iperf between a pair of servers and obtained some results. How do you interpret them? Well, that is not quite so easy. For Gigabit Ethernet various folks, including vEXPERT Rickard Nobel, have published some theoretical calculations. Rickard works out that Gigabit Ethernet could achieve a maximum throughput of 118 MBps (see his article here). Using two different ESXi servers in our lab, I ran an iperf test between two Windows 2008 R2 servers. The two servers were on the same VLAN and both were connected to the same Cisco SG300 switch. Here is what I got; note that all the results are in megabytes per second (MBps), not megabits per second (Mbps).

The result was a transfer rate of 105 MBps: not bad at all for a real world test and very close to the theoretical 118 MBps. Admittedly it was not quite so real world, as I ran the test on a weekend when nothing else was going on in our lab, but it is still a test on real hardware rather than a theoretical calculation. The two servers were attached to the same switch, which is not what you are going to have in a DCM scenario, but nonetheless this is a useful upper limit for what you can expect. For a DCM, when you take into account passing through multiple switches, routers and firewalls, and the fact that other types of traffic may be contending for the NICs and links, I reckon that 35-60 MBps is not an unreasonable “normal” transfer rate.
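The theoretical 118 MBps figure can be reproduced with some simple arithmetic. The sketch below is a reconstruction under stated assumptions: a standard 1500-byte MTU, 20-byte IPv4 and TCP headers with no options, and 38 bytes of Ethernet framing overhead per frame. It is not necessarily the exact calculation in Rickard's article.

```python
LINE_RATE_BPS = 1_000_000_000   # Gigabit Ethernet line rate, bits per second
MTU = 1500                      # assumed standard Ethernet MTU, bytes
IP_HEADER = 20                  # IPv4 header without options
TCP_HEADER = 20                 # TCP header without options
# Per-frame overhead on the wire: preamble + start delimiter (8),
# Ethernet header (14), frame check sequence (4), inter-frame gap (12)
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12


def max_tcp_mbytes_per_sec():
    """Best-case TCP payload rate over Gigabit Ethernet, in decimal MBps."""
    payload = MTU - IP_HEADER - TCP_HEADER   # application data per frame
    on_wire = MTU + ETHERNET_OVERHEAD        # bytes of wire time per frame
    efficiency = payload / on_wire
    return LINE_RATE_BPS / 8 * efficiency / 1_000_000


theoretical = max_tcp_mbytes_per_sec()
measured = 105  # MBps, the same-switch lab result quoted in the text
print(f"theoretical maximum: {theoretical:.1f} MBps")
print(f"measured / theoretical: {measured / theoretical:.0%}")
```

Under these assumptions each 1538-byte slot on the wire carries 1460 bytes of payload, which works out at a little under 119 MBps. Enabling TCP options such as timestamps would shrink the payload and lower the ceiling slightly.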

What’s bad?

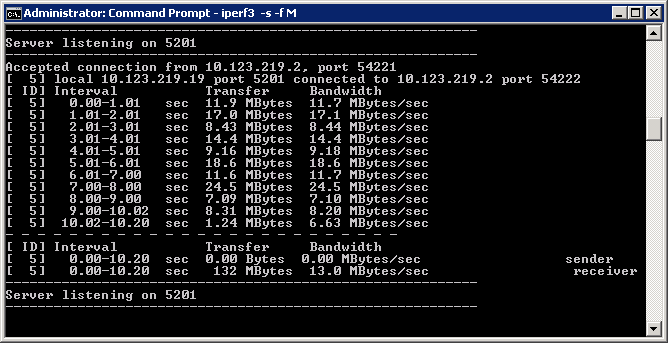

So when do you know you have a problem? You want bad? Let me show you abysmal.

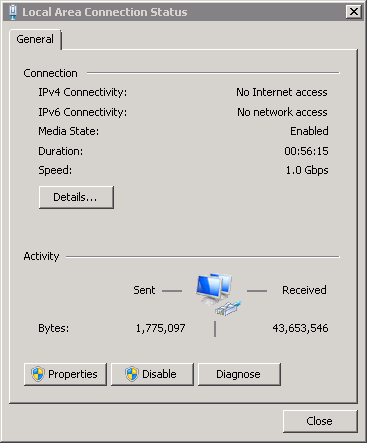

This is the transfer rate from one of our recently configured test desktops talking to a VM on one of our ESXi servers. It turns in 13 MBps, just 12% of the performance of our earlier VM to VM test using different ESXi hosts. Again the desktop and the ESXi server are on the same VLAN and connected to the same Cisco SG300 switch (well, actually I re-plugged the desktop into the same switch the first time I got this terrible result, but it didn’t make any difference). I tested the cable by unplugging it from the desktop, substituting my laptop and running another iperf test. That came out at 106 MBps, so the cable (and everything else in the chain) was OK.

In this case we were re-using some older high end ASUS motherboards for the test desktops, with on-board Marvell Yukon 88E8056 NICs. These are supposed to be GbE NICs, and indeed that is what had been auto-negotiated with the switch. First port of call was to get the latest driver from the ASUS site. Did that fix it? Unfortunately not; there was no significant difference. There is some bad press on the Web regarding these embedded NICs, so the next step was to reconfigure and install an Intel PRO/1000 PT PCI-e card in the desktop (we use these in all our ESXi servers and have found them to be reliable and to offer good performance, plus they are cheap!). That changed things significantly.

Re-running the iperf test now gave a throughput of 63 MBps, nearly five times the original 13 MBps. It’s true this is still a lot less than the 105 MBps that I got between ESX servers, but this is on a fairly old motherboard and will be constrained by PCI-e bus performance.

Giving 110%

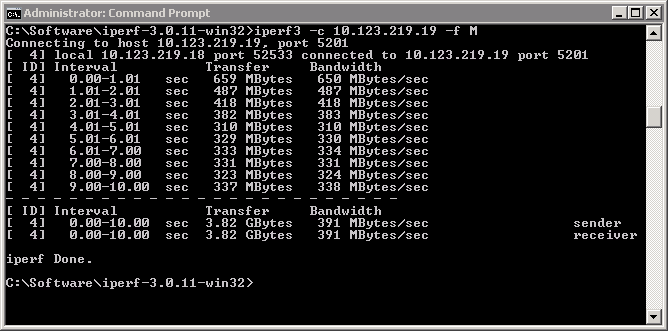

In the example below I have run iperf between two Windows 2008 R2 server VMs on the same ESX server. Notionally these two servers have 1 Gbps NICs, so in the physical world the maximum throughput would be 118 MBps, or probably around 105 MBps as per my earlier tests.

However, what I actually got was much more spectacular. This VM to VM test turned in a throughput of 391 MBps. That is more than three times the Gigabit Ethernet maximum transfer rate.

Why is this? Well, of course, it is because the networking between these two VMs is purely software running on the ESX server, and the transfer never goes off host to physical hardware. It also shows that although VMware presents the vNIC as notionally 1 Gigabit, it makes no attempt to enforce that speed and allows it to go as fast as it is able to.

Conclusion

Iperf is a useful tool for troubleshooting connections you think may be experiencing throughput problems. You can also use it proactively as part of your migration technical roll out: before you even start to use migration tools, you can use iperf to test the throughput between source and target servers. Because firewall rules may be in place for your tools and not for iperf, you may want to change the port iperf uses to, say, the same port as used by your migration tooling.
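As a rough illustration of this proactive use, the sketch below builds the server and client commands for a non-default port using iperf3's -p flag, and flags any source/target pair whose measured rate falls below a floor. Treat it as a sketch under assumptions. The function names, the host name, port 8443 and the 35 MBps floor (the low end of the “normal” range suggested earlier) are illustrative choices, and the measure callable stands in for however you actually run the test.

```python
def server_cmd(port):
    """iperf3 server listening on a non-default port, reporting in MBytes."""
    return ["iperf3", "-s", "-p", str(port), "-f", "M"]


def client_cmd(server, port):
    """iperf3 client connecting to the same non-default port."""
    return ["iperf3", "-c", server, "-p", str(port), "-f", "M"]


def slow_pairs(pairs, measure, floor_mbytes=35.0):
    """Return (source, target, rate) for every pair measured below the floor.

    `measure` is a hypothetical callable taking (source, target) and
    returning the throughput in MBps.
    """
    results = []
    for source, target in pairs:
        rate = measure(source, target)
        if rate < floor_mbytes:
            results.append((source, target, rate))
    return results


# Example: the commands to run at each end for a hypothetical port 8443
print(" ".join(server_cmd(8443)))
print(" ".join(client_cmd("target-01.example", 8443)))
```

Keeping the measurement behind a callable means the same check works whether the numbers come from a scripted iperf3 run or are typed in from console output.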